OpenAI integration

Integrating OpenAI into your Jigx apps offers tremendous potential to enhance functionality, improve user experiences, and drive innovation. By using advanced AI models, you can create smarter, more responsive, and more engaging apps that meet users' evolving needs. Whether you are building a new app or enhancing an existing one, OpenAI provides the tools and capabilities to turn your vision into reality.

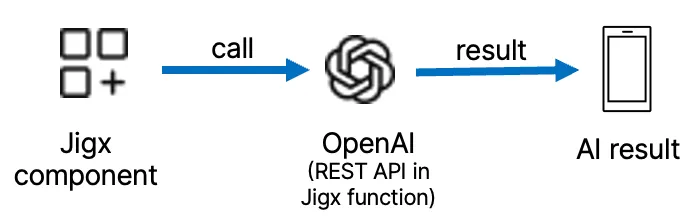

Jigx provides an integration layer that lets you call any REST-based service. AI services such as ChatGPT expose a REST API, so Jigx supports any AI service that is exposed as a REST service.
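Because the integration is plain REST, calling an AI service comes down to an HTTP POST with a JSON body. The Python sketch below only illustrates that idea outside Jigx, using the standard library; the helper names `build_rest_request` and `send` are hypothetical, and in a Jigx solution the function file performs this call for you.

```python
import json
import urllib.request
from typing import Optional

# Endpoint taken from the parameter table on this page. Any REST-based AI service
# can be called the same way by swapping the URL and the body.
CHAT_COMPLETIONS_URL = "https://api.openai.com/v1/chat/completions"


def build_rest_request(url: str, body: dict, headers: Optional[dict] = None) -> urllib.request.Request:
    """Build (without sending) a POST request that carries `body` as JSON."""
    all_headers = {"Content-Type": "application/json"}
    all_headers.update(headers or {})
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers=all_headers,
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request over the network and decode the JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage: the network call is left commented out; a real call also needs an Authorization header.
request = build_rest_request(
    CHAT_COMPLETIONS_URL,
    {"model": "gpt-3.5-turbo-0125", "messages": [{"role": "user", "content": "Hello"}]},
)
# reply = send(request)
```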

- Use your ChatGPT API key or AI model key to authorize the function calls.

- When integrating OpenAI's language models into your app, configure prompts to ensure optimal performance. Effective prompting can significantly influence the quality of responses generated by the AI.
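OpenAI expects the API key as a bearer token in the `Authorization` header. A minimal Python sketch, assuming the key is kept in an environment variable named `OPENAI_API_KEY` (an assumption for this example) and using a hypothetical helper `openai_auth_headers`. In a Jigx function, the equivalent header is configured under `parameters`.

```python
import os
from typing import Optional


def openai_auth_headers(api_key: Optional[str] = None) -> dict:
    """Return the Authorization header OpenAI expects: 'Bearer <API key>'."""
    # Fall back to an environment variable (assumed name) so the key is not hard-coded.
    key = api_key if api_key is not None else os.environ.get("OPENAI_API_KEY")
    if not key:
        raise ValueError("No API key: pass api_key or set OPENAI_API_KEY.")
    return {"Authorization": f"Bearer {key}"}


headers = openai_auth_headers("sk-example")  # placeholder key, not a real one
```

Keeping the key out of source code makes it easier to rotate and avoids committing it to a repository.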

A prompt is the input you provide the AI model to generate a response. This input can range from a simple question to a complex set of instructions. The effectiveness of the response largely depends on how well the prompt is crafted.

The ChatGPT REST API accepts prompts in the JSON body of the request you send to it. In Jigx, you configure this body in a function: the prompts go in the content property of each entry under messages, as shown in the examples below. Review the examples and compare the bland and engaging prompts. Configuring these prompts carefully significantly improves your results.
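To make the bland-versus-engaging comparison concrete, this Python sketch builds two request bodies that differ only in the system prompt. The prompt texts and the `chat_body` helper are hypothetical; the `model`, `messages`, `role`, and `content` structure follows the OpenAI Chat Completions request format.

```python
import json

MODEL = "gpt-3.5-turbo-0125"  # model name taken from the sample response on this page


def chat_body(system_prompt: str, user_prompt: str) -> dict:
    """Build a chat request body with one system message and one user message."""
    return {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }


# Hypothetical prompts, for illustration only.
question = "Explain what a sprint retrospective is."
bland = chat_body("Answer questions.", question)
engaging = chat_body(
    "You are a friendly agile coach. Answer in at most three short sentences, "
    "use plain language, and end with one practical tip.",
    question,
)

print(json.dumps(engaging, indent=2))
```

The engaging version states a persona, a length limit, and a tone, which gives the model far more guidance than a bare instruction.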

To integrate OpenAI and show the AI response in your Jigx mobile app:

- Create a Jigx solution.

- In the functions folder, create a function.jigx file to configure the call to the OpenAI REST API. For more information on configuring a REST function, see REST Overview.

- In the function file's inputTransform, configure the prompts that tell the AI what to do and how to behave. Add them in the "content:" property of each entry under messages.

- Configure an action to call the OpenAI function. In most cases, a sync-entities action is used to return the response from the AI server.
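The steps above amount to a small pipeline: the inputTransform builds the request body, the function sends it, and the outputTransform picks out the reply that the action returns to your app. The Python sketch below models that pipeline under stated assumptions: `input_transform`, `output_transform`, `call_ai_function`, and `fake_transport` are hypothetical names, and the fake transport stands in for the real HTTP call so the flow runs offline.

```python
from typing import Callable

# A transport takes a request body (dict) and returns the decoded JSON response (dict).
Transport = Callable[[dict], dict]


def input_transform(question: str) -> dict:
    """Plays the role of inputTransform: wrap the user's text in a chat request body."""
    return {
        "model": "gpt-3.5-turbo-0125",
        "messages": [
            {"role": "system", "content": "You are a concise, friendly assistant."},
            {"role": "user", "content": question},
        ],
    }


def output_transform(response: dict) -> dict:
    """Plays the role of outputTransform: keep only the assistant's reply."""
    return {"content": response["choices"][0]["message"]["content"]}


def call_ai_function(question: str, transport: Transport) -> dict:
    """Roughly what happens when an action (for example, sync-entities) runs the function."""
    return output_transform(transport(input_transform(question)))


def fake_transport(body: dict) -> dict:
    """Offline stand-in for the OpenAI endpoint, shaped like the sample response on this page."""
    return {
        "choices": [
            {
                "index": 0,
                "message": {"role": "assistant", "content": "Hello there, how may I assist you today?"},
                "finish_reason": "stop",
            }
        ]
    }


result = call_ai_function("Hi!", fake_transport)
print(result)  # {'content': 'Hello there, how may I assist you today?'}
```

Injecting the transport keeps the transforms testable without network access; swapping in a real HTTP call changes nothing else.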

Jigx functions are used to configure the REST integration with AI models such as ChatGPT. Once a function is configured, you can call it from anywhere in your visual components. See the section on REST integration to understand how to use REST in Jigx. The table below explains how the parameters are configured to integrate Jigx with the AI model. Always refer to the REST API documentation of the AI model you have selected, to make sure you provide the required input and configure the output to return the correct response from the model.

| Parameter | Description |
|---|---|
| method | `POST`. The request sends the body defined in the inputTransform (model, prompts, and options) to the AI model's REST endpoint, which returns the generated response. |
| url | The URL of the AI model's REST API. For example, the URL for ChatGPT is: https://api.openai.com/v1/chat/completions |
| outputTransform | Defines which parts of the AI response are returned to your app. Check the AI API's response format to make sure your configuration matches it. For example, an OpenAI response looks like this: `{ "id": "chatcmpl-123", "object": "chat.completion", "created": 1677652288, "model": "gpt-3.5-turbo-0125", "system_fingerprint": "fp_44709d6fcb", "choices": [{ "index": 0, "message": { "role": "assistant", "content": "\n\nHello there, how may I assist you today?" }, "logprobs": null, "finish_reason": "stop" }], "usage": { "prompt_tokens": 9, "completion_tokens": 12, "total_tokens": 21 } }` To return the assistant's message, configure the outputTransform as follows: `outputTransform: \| { "content": $.choices[0].message.content }` |
| inputTransform | Provides the AI with the information it needs to return the response (chat message). `model`: the name and version of the AI model. `response_format`: the format the model must return its output in, for example, `{ "type": "json_object" }`. `messages`: the prompts that instruct the AI, each specified by a `role` and `content`. You can configure multiple messages. |
| parameters | Configure authorization and authentication as required by the AI model you are using. For each parameter, configure its type, whether it is required, and its location. |
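When `response_format` is set to `json_object`, the `content` string in the response is itself JSON text, so whatever consumes the outputTransform result may need to parse it. A Python sketch, assuming a hypothetical prompt that asks for `title` and `summary` keys and a hypothetical response shaped like the sample in the table. OpenAI's JSON mode also expects the prompt itself to ask for JSON, which is why the system message mentions it.

```python
import json


def json_mode_body(user_prompt: str) -> dict:
    """Request body asking the model for a JSON object instead of free text."""
    return {
        "model": "gpt-3.5-turbo-0125",
        "response_format": {"type": "json_object"},
        "messages": [
            # JSON mode expects the prompt itself to ask for JSON output.
            {"role": "system", "content": 'Reply only with a JSON object with the keys "title" and "summary".'},
            {"role": "user", "content": user_prompt},
        ],
    }


def extract_json_content(response: dict) -> dict:
    """Python equivalent of $.choices[0].message.content, then parse the JSON text it holds."""
    content = response["choices"][0]["message"]["content"]
    return json.loads(content)


# Hypothetical response: same shape as the table's sample, but the content is a JSON string.
sample_response = {
    "object": "chat.completion",
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": '{"title": "Hello", "summary": "A greeting."}'},
            "finish_reason": "stop",
        }
    ],
}

print(extract_json_content(sample_response))  # {'title': 'Hello', 'summary': 'A greeting.'}
```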